“Artificial Intelligence is teaching machines how to think; Quantum Computing is giving them a new way to reason. Together, they won’t just solve problems faster—they’ll solve problems we never thought computable.”

– Matthew D. Ferrante, CDO, CISO, CITANEX


Introduction

Quantum computing and artificial intelligence are two of the most transformative technologies driving innovation today, but they’re often misunderstood or mistakenly grouped together. While AI uses traditional computing to learn from data and simulate aspects of human intelligence, quantum computing uses the laws of quantum physics to represent and manipulate many possible states at once.

For executives and professionals navigating the future of tech strategy, understanding the distinction between these two fields is critical. In this article, we unpack the core differences, explore recent breakthroughs, highlight geopolitical investments, and examine the strategic dependencies every decision-maker should know. Through clear explanations and real-world analogies, you’ll walk away with actionable insight—not just technical jargon.

Curious how quantum computing might impact cybersecurity? Read our related article here.

What Is Quantum Computing?

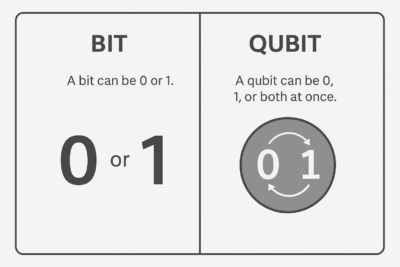

Quantum computing is a revolutionary form of computation that uses qubits¹ (quantum bits) instead of classical bits. A classical bit is always either 0 or 1, but a qubit can be in a superposition² of 0 and 1: a weighted combination of both that resolves to a single value only when it is measured. Combined with entanglement, this allows quantum computers to solve certain complex problems much faster than classical computers.

Analogy: Imagine a light switch. A classical bit is either ON or OFF. A qubit, however, is more like a dimmer that holds a blend of ON and OFF until it is observed; at that moment it snaps to fully ON or fully OFF, with odds set by the blend.
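To put numbers on the dimmer analogy, here is a minimal sketch that simulates qubits on an ordinary computer using NumPy. It assumes ideal, noise-free qubits and is only an illustration, not how real quantum hardware is programmed; the names `ZERO`, `H`, `CNOT`, `probabilities`, and `measure` are our own. A qubit's state is a list of amplitudes, and the chance of each measurement outcome is the squared size of its amplitude. The second half entangles two qubits into a so-called Bell state, so their measured values always agree even though each one on its own behaves like a fair coin flip.

```python
import numpy as np

# Basis state |0> as a length-2 complex vector (|1> would be [0, 1]).
ZERO = np.array([1, 0], dtype=complex)

# Hadamard gate: turns |0> into an equal superposition of |0> and |1>.
H = np.array([[1, 1], [1, -1]], dtype=complex) / np.sqrt(2)

# CNOT gate on two qubits (first qubit is the control, second the target),
# using the basis order |00>, |01>, |10>, |11>.
CNOT = np.array([[1, 0, 0, 0],
                 [0, 1, 0, 0],
                 [0, 0, 0, 1],
                 [0, 0, 1, 0]], dtype=complex)


def probabilities(state):
    """Born rule: the chance of each outcome is |amplitude| squared."""
    return np.abs(state) ** 2


def measure(state, rng):
    """Sample one outcome index; measurement yields a single basis state."""
    return int(rng.choice(len(state), p=probabilities(state)))


# One qubit in superposition: amplitude 1/sqrt(2) for both 0 and 1.
qubit = H @ ZERO
print(probabilities(qubit))  # each outcome has probability 0.5

# Two qubits: Hadamard on the first, then CNOT, gives (|00> + |11>)/sqrt(2).
bell = CNOT @ np.kron(H @ ZERO, ZERO)
rng = np.random.default_rng(seed=0)
counts = {"00": 0, "01": 0, "10": 0, "11": 0}
for _ in range(1000):
    counts[format(measure(bell, rng), "02b")] += 1
print(counts)  # only "00" and "11" ever appear
```

Simulating n qubits this way requires tracking 2ⁿ amplitudes, which is one intuition for why classical computers struggle to imitate large quantum systems.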

What Is Artificial Intelligence?

Artificial intelligence (AI) refers to systems that simulate human cognition to perform tasks like recognizing speech, making decisions, or predicting outcomes. AI algorithms rely on massive datasets and pattern recognition, but they run on traditional, binary-based computers.

Analogy: Think of AI as a chef that follows complex recipes (algorithms) using a conventional kitchen (classical computer). Quantum computing is like giving that chef a futuristic kitchen with tools that can prepare multiple recipes at once.
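For contrast, the sketch below shows the classical side of the comparison: a single artificial neuron (a perceptron) that learns a rule from labeled examples on ordinary hardware. It is a deliberately tiny, hypothetical example; the AND task and the names `DATA`, `train`, and `predict` are illustrative. Modern AI systems combine millions or billions of such weights into neural networks and train them on far larger datasets, but the loop of predicting, measuring the error, and adjusting the weights is similar in spirit.

```python
# Toy training data: (inputs, label). Hypothetical task: learn logical AND,
# where the label is 1 only when both inputs are 1.
DATA = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]


def predict(weights, bias, x):
    """Output 1 when the weighted sum of the inputs plus bias is positive."""
    total = sum(w * xi for w, xi in zip(weights, x)) + bias
    return 1 if total > 0 else 0


def train(data, epochs=20, lr=0.1):
    """Perceptron learning rule: adjust the weights after every mistake."""
    weights = [0.0, 0.0]
    bias = 0.0
    for _ in range(epochs):
        for x, label in data:
            error = label - predict(weights, bias, x)  # -1, 0, or +1
            weights = [w + lr * error * xi for w, xi in zip(weights, x)]
            bias += lr * error
    return weights, bias


weights, bias = train(DATA)
print([predict(weights, bias, x) for x, _ in DATA])  # [0, 0, 0, 1]
```

Nothing here is quantum: every step is ordinary arithmetic on a conventional processor, which is the “conventional kitchen” in the chef analogy.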

Key Differences Between Quantum Computing and AI

| Feature | Quantum Computing | Artificial Intelligence |
|---|---|---|
| Core Technology | Qubits, Superposition, Entanglement³ | Algorithms⁴, Neural Networks⁵ |
| Processing Type | Parallel⁶, probabilistic⁷ | Serial⁸ or parallel⁹ (classical) |
| Current Maturity | Early-stage, experimental | Commercially deployed |
| Use Cases | Cryptography¹⁰, simulation, optimization | Image recognition, natural language¹¹, automation |
| Hardware | Requires specialized environments | Runs on traditional hardware |
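The “parallel, probabilistic” entry in the table can be made concrete with Grover’s search algorithm, which the footnotes mention. The sketch below simulates it classically with NumPy; the function name `grover_search`, the 64-item list, and the marked item 42 are illustrative assumptions. Every item starts with equal amplitude; each round flips the sign of the marked item and then reflects all amplitudes about their average, so interference steadily concentrates probability on the answer. After roughly (π/4)·√N rounds, a measurement returns the marked item with high probability, compared with 1/N for a single random guess.

```python
import numpy as np


def grover_search(n_qubits, marked, iterations=None):
    """Classically simulate Grover's search over 2**n_qubits items.

    Returns the probability of measuring each item after the final round.
    """
    size = 2 ** n_qubits
    if iterations is None:
        # About (pi/4) * sqrt(N) rounds is near-optimal for one marked item.
        iterations = int(np.floor(np.pi / 4 * np.sqrt(size)))

    # Equal superposition: every item starts with amplitude 1/sqrt(N).
    state = np.full(size, 1 / np.sqrt(size))

    for _ in range(iterations):
        # Oracle step: flip the sign of the marked item's amplitude.
        state[marked] *= -1
        # Diffusion step: reflect every amplitude about the mean, which
        # amplifies the marked item through interference.
        state = 2 * state.mean() - state

    # Amplitudes stay real in this simulation, so probability = amplitude**2.
    return state ** 2


probs = grover_search(n_qubits=6, marked=42)
print(f"Grover, 6 rounds: P(item 42) = {probs[42]:.3f}")
print(f"Single random guess: P = {1 / 64:.3f}")
```

A classical search through an unsorted list needs about N/2 checks on average, while Grover’s algorithm needs on the order of √N queries: a quadratic speedup, not an exponential one. The simulation itself enjoys no speedup, because it tracks all 2ⁿ amplitudes explicitly.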

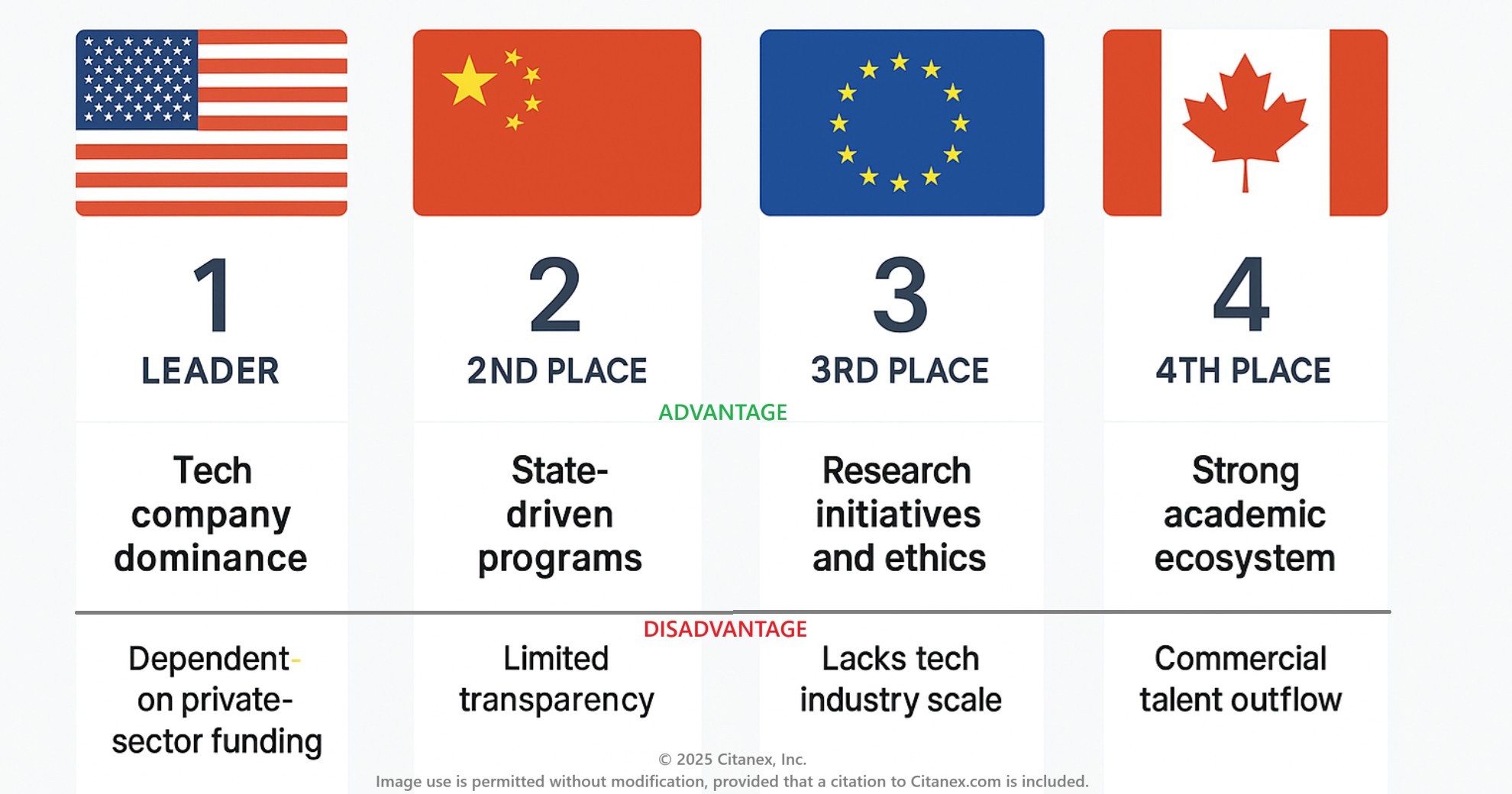

The Geopolitical Race: Who’s Leading in Quantum and AI?

The race for quantum and AI dominance is as much about national security and economic power as it is about technology. Leading countries and blocs include:

United States

- Major investments through the Defense Advanced Research Projects Agency (DARPA)¹², National Science Foundation (NSF)¹³, and Department of Energy (DOE)¹⁴.

- Home to Google, IBM, Microsoft, and OpenAI.

- Executive orders on quantum strategy and AI governance.

China

- Aggressively funding quantum research through its 14th Five-Year Plan.

- Built the world’s first quantum satellite (Micius).

- Strong state-sponsored AI surveillance and automation programs.

European Union

- EU Quantum Flagship: €1B initiative for quantum research.

- Ethical AI frameworks and regulatory leadership (e.g., AI Act).

Canada

- Home to pioneering AI researchers and quantum computing companies such as D-Wave and Xanadu.

- Government support for both AI and quantum research hubs.

Other Key Players

United Kingdom, Israel, Australia, and South Korea are also ramping up national quantum and AI strategies.

This global competition is about more than technological progress; it is also about setting the standards and ethical frameworks that will govern these innovations.

Core Dependencies for Quantum Computing and Artificial Intelligence

Both quantum computing and AI rely on a complex web of infrastructure, talent, and environmental conditions. Understanding these dependencies is critical to assessing their scalability and long-term impact. Their shared and distinct dependencies include:

- Energy Production:
  - Quantum computers need stable, reliable power, much of it for the cooling systems and control electronics that keep qubits working.
  - AI systems require significant energy for model training and for the data centers that run them.
- Advanced Materials and Hardware:
  - Quantum systems need specialized components, such as superconducting circuits or ion traps, depending on the qubit technology.
  - AI relies on high-performance GPUs and custom silicon.
- Data Infrastructure:
  - Quantum computing requires high-fidelity, low-noise transmission, because quantum information is easily disturbed.
  - AI thrives on large datasets and cloud infrastructure.
- Skilled Workforce:
  - Both technologies demand interdisciplinary experts in physics, computer science, mathematics, and engineering.
- Government & Industry Investment:
  - Sustained R&D funding and public-private partnerships are essential for advancement.

These dependencies highlight why innovation in these areas often clusters in tech-forward nations with strong academic, industrial, and policy support.

Recent Breakthroughs in Quantum Computing

- Microsoft’s Majorana 1 Chip (2025): Built around topological qubits, which Microsoft says offer better stability and error resistance.

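- Google’s Willow Processor (2024): Demonstrated error correction below the surface-code threshold, with logical error rates falling as the code grew, and completed a benchmark computation that Google estimates would take classical supercomputers an impractically long time.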

- D-Wave Systems: Claimed quantum supremacy in solving material simulation problems.

- Cisco’s Quantum Networking Chip (2025): A prototype intended as a step toward linking quantum computers and building quantum internet infrastructure.
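Error correction, the area where Willow drew the most attention, rests on redundancy: spread one logical bit across several physical ones so that occasional errors can be outvoted. The sketch below uses the simplest such scheme, a classical repetition code decoded by majority vote, which is far simpler than the surface code Google reported using on Willow; `logical_error_rate` is a hypothetical helper and the error rates are illustrative. It shows the “threshold” idea: when each physical copy fails less often than the threshold (50% in this toy model), adding copies drives the logical error rate down; above the threshold, adding copies makes things worse.

```python
from math import comb


def logical_error_rate(p, distance):
    """Chance that majority voting over `distance` copies returns the wrong bit.

    Each copy flips independently with probability p; decoding fails when
    more than half of the copies are flipped.
    """
    return sum(
        comb(distance, k) * p**k * (1 - p) ** (distance - k)
        for k in range(distance // 2 + 1, distance + 1)
    )


# Below the toy threshold (p = 0.1) versus above it (p = 0.6).
for p in (0.1, 0.6):
    rates = [logical_error_rate(p, d) for d in (1, 3, 5, 7)]
    print(f"p={p}: " + ", ".join(f"{r:.4f}" for r in rates))
```

Real quantum codes must also cope with phase errors and faulty measurements, so their thresholds are far lower than 50%, which is why operating below threshold on actual hardware is treated as a milestone.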

Final Thoughts

Quantum computing and artificial intelligence are distinct, complementary technologies poised to redefine industries and international power structures. Understanding the differences between them is the first step toward grasping their potential and preparing for their impact.

As the geopolitical race heats up and technological milestones accelerate, one thing is clear: we are entering a new era of computing where yesterday’s limits may no longer apply.

Need the latest technology and strategic insights for a competitive edge? Contact Citanex today!


Sources & Methodology

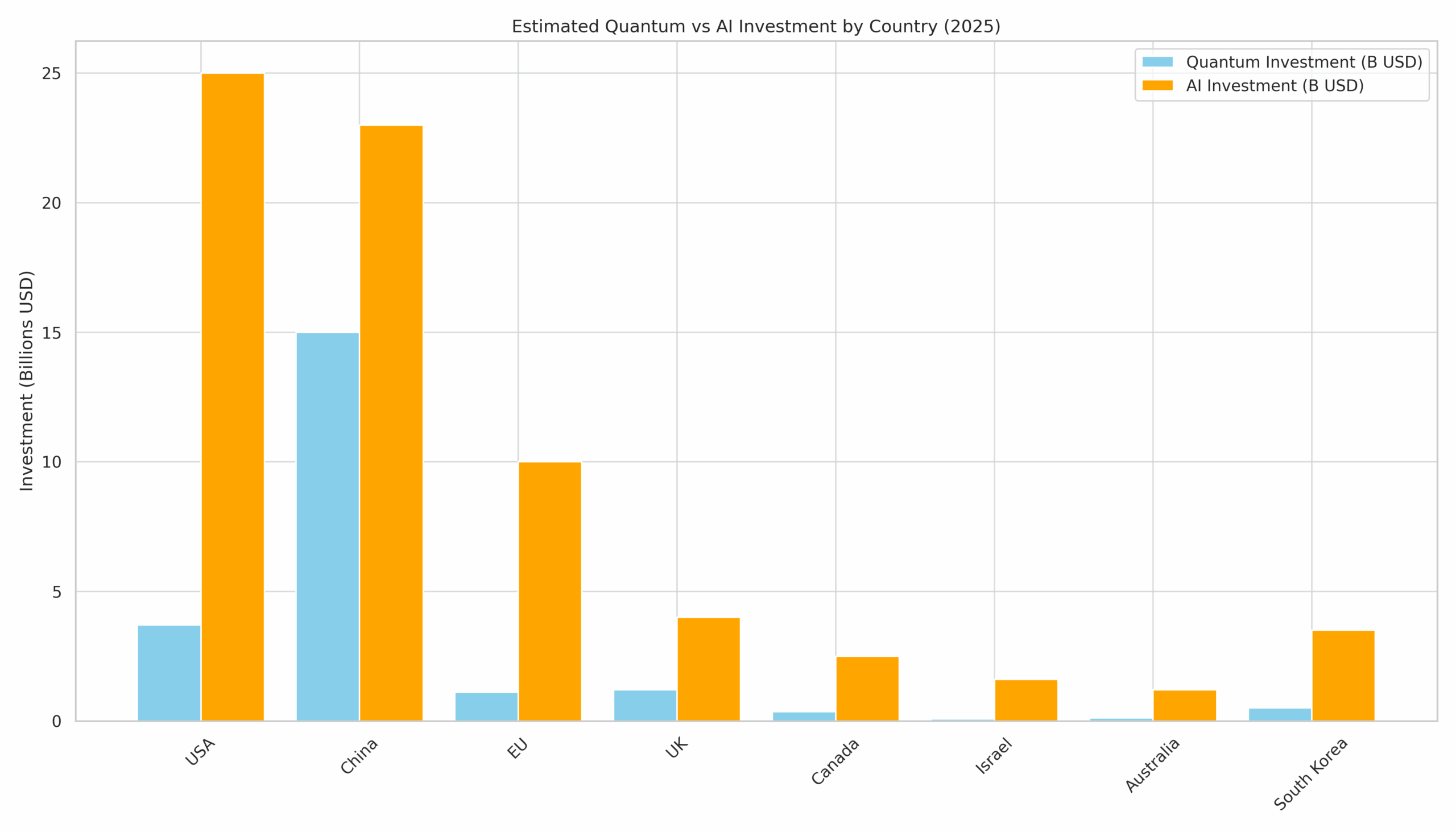

The data visualizations and rankings presented in this article are based on publicly available information as of 2025, drawn from a combination of government reports, global AI indices, research programs, and investment announcements. All figures are best estimates and should be interpreted as directional rather than exact.

🔹 Quantum & AI Investment Estimates

- USA: National Quantum Initiative Act, DARPA, NSF, DOE; private-sector data from OpenAI, IBM, Microsoft.

- China: State Council, 14th Five-Year Plan, Micius satellite program, Tsinghua University AI report.

- EU: EU Quantum Flagship (€1B), Horizon Europe, AI Act documentation.

- UK: National Quantum Technologies Programme, UK AI Roadmap.

- Canada: CIFAR, Pan-Canadian AI Strategy, National Quantum Strategy.

- Israel: Israel Innovation Authority, Ministry of Defense, MIT Technology Review.

- Australia: CSIRO, National AI and Quantum Initiatives.

- South Korea: Ministry of Science and ICT, AI chip programs.

- India (contextual): NITI Aayog AI Strategy, National Quantum Mission (2023).

🔹 Ranking Benchmarks & Indices

- Tortoise Intelligence – Global AI Index

- Stanford University – AI Index Report

- Oxford Insights – Government AI Readiness Index

All visualizations and summaries © 2025 Citanex, Inc.

Image and chart usage is permitted without modification, provided that citation to Citanex.com is included.

Additional Sources

Google Quantum AI – Announcing the Google Willow quantum processor

https://blog.google/technology/research/google-willow-quantum-chip/

Microsoft Research – Microsoft introduces the Majorana 1 quantum chip

https://news.microsoft.com/source/features/innovation/microsofts-majorana-1-chip-carves-new-path-for-quantum-computing/

D-Wave Systems – Demonstration of quantum advantage in material simulation

https://www.hpcwire.com/off-the-wire/d-wave-reports-quantum-advantage-in-materials-simulation-study/

Cisco Systems – Quantum Networking Lab and chip prototype launch (Reuters)

https://www.reuters.com/business/media-telecom/cisco-shows-quantum-networking-chip-opens-new-lab-2025-05-06/

U.S. National Quantum Initiative

https://www.quantum.gov/

China’s 14th Five-Year Plan for Science and Technology – Summary via The Diplomat

https://thediplomat.com/2025/10/chinas-next-five-year-plan-will-bet-on-technology-at-home-and-abroad/

European Union Quantum Flagship Program

https://qt.eu/

European Commission – AI Act Overview

https://artificialintelligenceact.eu/

Canada’s National Quantum Strategy – Government of Canada

https://ised-isde.canada.ca/site/national-quantum-strategy/en

OpenAI – Artificial Intelligence and Machine Learning Infrastructure

https://openai.com/research

OECD AI Policy Observatory – AI Global Investment & Trends

https://oecd.ai/

Nature & Scientific American – Comparative articles on quantum vs. AI capabilities and dependencies, including “Quantum Computers Can Now Run Powerful AI That Works like the Brain”

https://www.scientificamerican.com/article/quantum-computers-can-run-powerful-ai-that-works-like-the-brain/

Footnotes

1. Qubit is the basic unit of information in quantum computing. Unlike a regular bit, which is either 0 or 1, a qubit can be 0, 1, or, through quantum superposition, a combination of both at the same time.

2. Superposition is a fundamental principle in quantum computing where a quantum bit (qubit) can exist in a combination of states, both 0 and 1, at the same time, instead of being limited to just one like a regular computer bit. Imagine flipping a coin. A classical bit is like a coin showing heads or tails. A qubit in superposition is like a coin spinning in the air, in a sense both heads and tails at once, until you catch it.

3. Entanglement is a quantum phenomenon where two or more qubits become linked so that their measurement outcomes are correlated, no matter how far apart they are. Measuring one immediately tells you something about the other, although this cannot be used to send information faster than light.

4. Algorithm is a step-by-step set of instructions a computer follows to solve a problem or complete a task. Think of an algorithm like a cooking recipe: each step guides the process to achieve a specific result, whether it’s baking a cake or tying one’s shoes.

5. Neural network is a system of algorithms that learns from data by recognizing patterns, loosely inspired by how our brains learn from experience. It’s commonly used in artificial intelligence tasks like image recognition, speech processing, and decision-making.

6. Parallel processing in quantum computing refers to a quantum computer’s ability to work with many possible solutions at once using the principles of superposition and entanglement, rather than checking each possibility one by one like a classical computer. Quantum parallelism doesn’t mean we get all answers instantly: the system evolves a whole space of possible outcomes at once, and clever algorithms (like Grover’s or Shor’s) steer it so the right answer is likely to appear when the qubits are measured.

7. Probabilistic means something is based on likelihoods or chances, not certainties. Instead of giving a definite answer, it gives a range of possible outcomes, each with a certain probability. Rolling a die is a probabilistic event. You can’t predict the exact number you’ll get, but you know each number (1–6) has a 1 in 6 chance.

8. Serial processing means a computer completes one task at a time, step by step, in a specific sequence. Think of a single cashier checking out one customer at a time in a line.

9. Parallel processing (in classical computing) means a computer divides a task into smaller parts and processes multiple tasks at the same time, often using multiple processors or cores. Now imagine four cashiers serving customers simultaneously, much faster than one doing it alone. Parallel processing is usually faster than serial processing and more efficient for big tasks like simulations, image rendering, or AI model training. The key difference: classical parallelism means more hardware doing more tasks at once, while quantum parallelism means more possibilities explored at once using quantum physics.

10. Cryptography is the practice of using codes and mathematical techniques to secure information so only the intended person can read or access it. Think of cryptography like a lockbox for data: only someone with the correct key or password can unlock and read what’s inside.

11. Natural language refers to the way humans naturally speak or write, like English, Spanish, or Mandarin. In AI, it means teaching machines to understand, interpret, and generate human language. Imagine you’re teaching a robot to read a book or answer questions. Natural Language Processing (NLP) is what allows that robot to understand the words and respond like a person would.

12. Defense Advanced Research Projects Agency (DARPA) is a U.S. government agency under the Department of Defense that funds and develops advanced technologies for national security, including AI, robotics, and quantum computing.

13. National Science Foundation (NSF) is an independent U.S. agency that supports fundamental research and education in science and engineering. NSF is a major funder of academic AI and quantum computing research.

14. Department of Energy (DOE) is a U.S. federal department responsible for energy policy and nuclear safety. It also oversees national labs like Oak Ridge and Argonne, which play key roles in quantum computing and high-performance computing research.
